Causes and Appearance of Noise in Seismic Data Volumes*

Satinder Chopra¹ and Kurt J. Marfurt²

Search and Discovery Article #41476 (2014)

Posted November 3, 2014

*Adapted from the Geophysical Corner column, prepared by the authors, in AAPG Explorer, October, 2014. Editor of Geophysical Corner is Satinder Chopra ([email protected]). Managing Editor of AAPG Explorer is Vern Stefanic.

¹Arcis Seismic Solutions, TGS, Calgary, Canada ([email protected])

²University of Oklahoma, Norman, Oklahoma

Seismic data are usually contaminated with noise, which refers to any unwanted features in the data. These unwanted features may actually be somebody else's signal, such as converted waves in what we think of as "P-wave" data – but more commonly, these unwanted "noise" features provide little or no information about the subsurface, and are classified as either random noise or coherent noise. Examples of random noise include wave action in a marine environment, wind and vehicle traffic in a land environment, and electronic instrument noise in both environments.

There are two types of coherent noise:

On processed data, noise that looks random in time may be highly organized in space, such as acquisition footprint, which is highly correlated with the acquisition geometry. The least ambiguous but most difficult type of "noise" to address is the total absence of signal, such as dead traces and lower-fold areas corresponding to unrecorded offsets and azimuths. Whatever their cause, all these types of seismic noise can result in significant artifacts that may negatively impact subsequent interpretation products, from simple structural and spectral attributes through prestack impedance inversion to AVAz analysis.

♦General statement ♦Figures ♦Suppression of noise ♦Examples ♦Conclusions

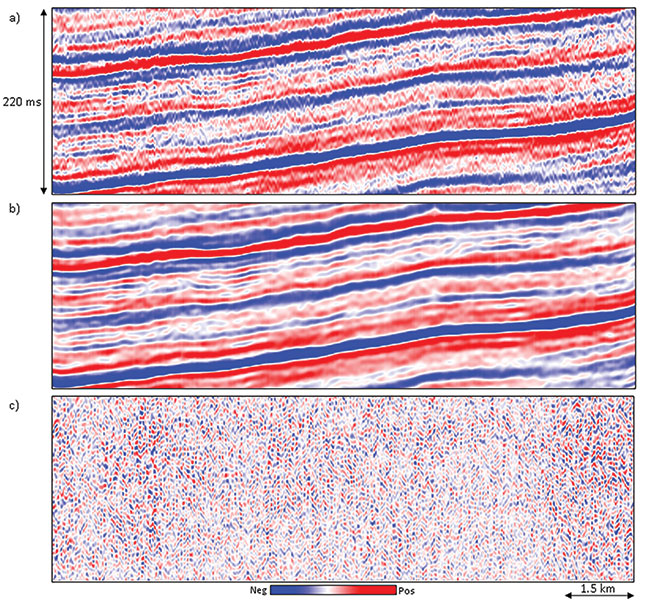

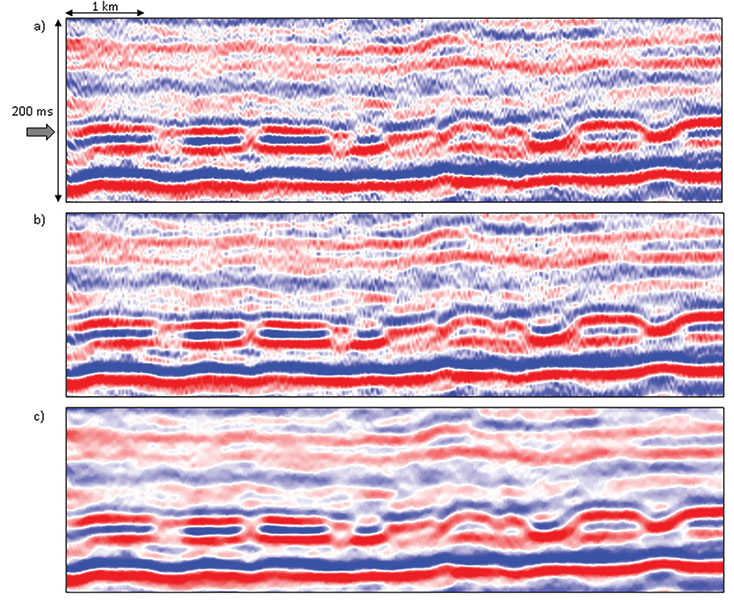

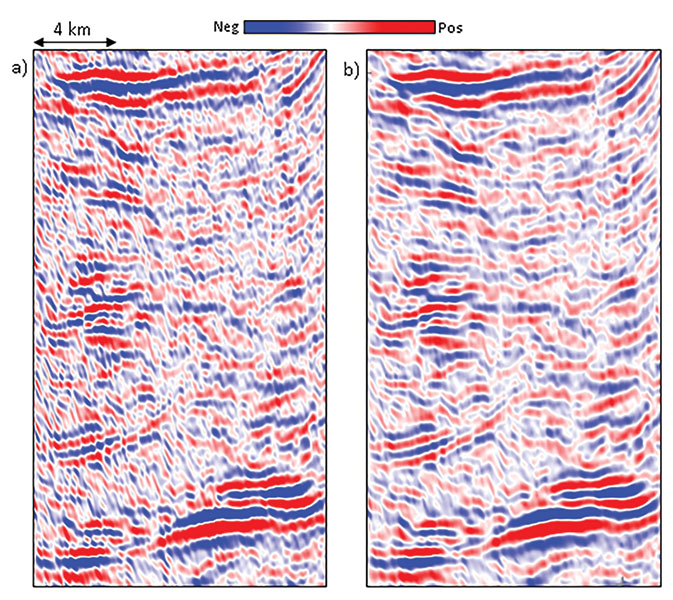

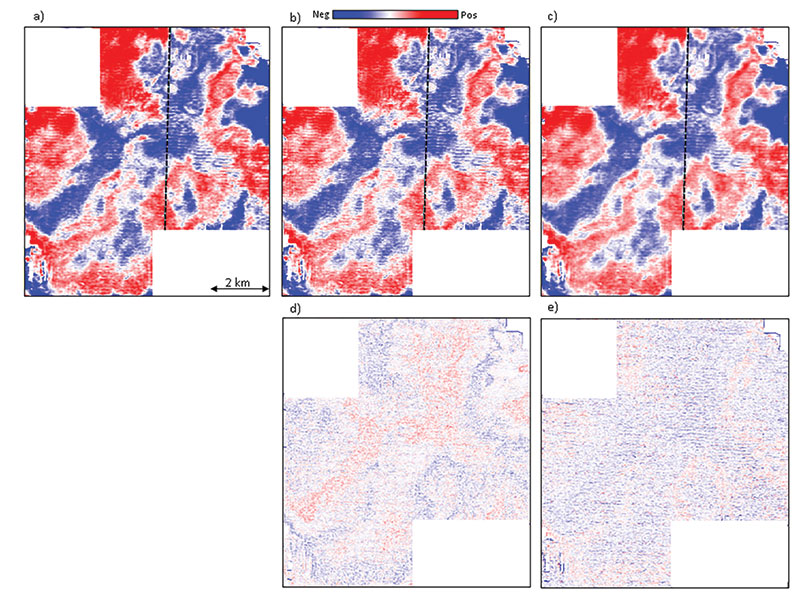

Of all the types of noise, random noise – or coherent noise that appears random – is the easiest to suppress. The mean filter is the simplest and most familiar noise suppression filter, but it also smooths across faults and other lateral discontinuities. In contrast, a structure-oriented median filter not only suppresses random noise but also preserves lateral reflector discontinuities. The median filter gathers the samples within the chosen aperture along the local dip and azimuth and replaces the amplitude of the central sample with the median of those amplitudes.

Principal component filtering is a third option. Mathematically, the principal component filter generates a five-sample pattern (one value per trace in a five-trace analysis window) that best represents the lateral variation in amplitude along the 2K+1 slices of the window. In the absence of high-amplitude artifacts, the principal component filter accurately preserves lateral changes in seismic amplitude while rejecting noise.

Each of these filters can also be made edge-preserving. The simplest way to preserve edges is to first locate them using a coherence or Sobel filter algorithm sensitive to discontinuities, and then apply the desired filter only where the coherence exceeds a user-defined value. A slightly more complicated way is to evaluate the standard deviation (or, alternatively, the coherence) in a suite of overlapping windows that include the analysis point. The mean, median, principal component or other filter is then computed in the window with the smallest standard deviation (or the highest coherence) and mapped to the desired sample.

We show the application of principal component structure-oriented filtering to a data volume through a representative seismic section in Figure 1. The input data in Figure 1a show good reflectors with subtle cross-cutting noise. The filtered section (Figure 1b) exhibits improved event continuity and preserved amplitudes. To ensure that no useful reflection detail is lost in the filtering process, we compute the difference volume and examine it.
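The multiwindow edge-preservation strategy described above can be illustrated with a minimal sketch. It assumes the data have already been flattened along the local dip and azimuth, so that "structure-oriented" reduces to filtering a 1-D run of amplitudes across neighboring traces. The function name `multiwindow_filter`, the half-width `k` and the choice of a median as the inner filter are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def multiwindow_filter(amps, k=2, inner=np.median):
    """Edge-preserving lateral filter along a dip-flattened horizon (sketch).

    For each sample, every window of length 2k+1 that contains the sample is
    evaluated; the inner filter is computed in the window with the smallest
    standard deviation, i.e. the window least likely to straddle an edge.
    """
    amps = np.asarray(amps, dtype=float)
    n = amps.size
    width = 2 * k + 1
    out = np.empty_like(amps)
    for i in range(n):
        best_std, best_val = np.inf, amps[i]
        # Candidate windows start anywhere from i - 2k to i, so each contains i.
        for start in range(i - 2 * k, i + 1):
            if start < 0 or start + width > n:
                continue  # skip windows that run off the ends of the data
            win = amps[start:start + width]
            s = win.std()
            if s < best_std:
                best_std, best_val = s, inner(win)
        out[i] = best_val
    return out


# Usage: a noise-free step in amplitude, as across a fault.
step = np.r_[np.ones(10), 3.0 * np.ones(10)]
filtered = multiwindow_filter(step)
```

Because the window with the smallest standard deviation is the one least likely to straddle a discontinuity, the step passes through unchanged, whereas a centered running mean of the same width would smear it over several traces. An isolated spike, by contrast, falls inside every candidate window, and the median then rejects it.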
As seen in Figure 1c, no reflection events have been rejected. Instead, we see random noise as well as inclined, broken noise patterns. This steeply dipping noise is common to most seismic data volumes and is associated with the migration of shallow reflections, diffractions and coherent noise that were insufficiently sampled, or aliased, during acquisition. Modern "high density" acquisition directly addresses these sampling problems and results in superior images for the interpreter.

Structure-oriented filtering is widely used in the industry and has found its way into most commercial interpretation workstation software packages. Because it works well in most cases, interpreters tend to apply it routinely, irrespective of the quality of the input seismic data. We wish to emphasize that noise suppression should be done carefully, and only after examining the quality of the data. Parameters can be important. In general, one should avoid running … In this example, the edge-preserving, structure-oriented filtering was run with the default parameters of a popular commercial seismic interpretation package. These default parameters result in smoothing not only along dip but also perpendicular to dip, so that the filter acts as a low-pass filter. One should always examine the rejected noise by computing the difference between the input and output, as shown in Figure 1c and Figure 2c.

In Figure 3a, we show a small segment of a seismic section close to the edge of the survey. The data at the survey edge, on the right side of the display, exhibit migration smiles. Seismic migration takes each sample of the input data and maps it to a 3-D ellipsoid in the output data. If the surface data are sufficiently densely sampled, these smiles interfere constructively along reflectors and diffractors and destructively elsewhere, thereby forming the migrated image.
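To make the smile geometry concrete, the sketch below computes a 2-D slice through the constant-velocity migration impulse response: a single input sample with two-way time t, recorded by a source and receiver at surface positions `src` and `rec`, is spread along an ellipse whose foci are the source and receiver. Constant velocity and a flat recording surface are simplifying assumptions, and `migration_impulse_response` is a hypothetical helper name. At zero offset the ellipse becomes a semicircle of radius v·t/2, the familiar migration smile.

```python
import numpy as np


def migration_impulse_response(x, src, rec, t, v):
    """Depth z(x) of the constant-velocity migration impulse response (sketch).

    Every image point whose source-to-point-to-receiver path length equals
    v * t receives a copy of the input sample: an ellipse with foci at src
    and rec. Returns NaN where x lies outside the ellipse.
    """
    x = np.asarray(x, dtype=float)
    a = 0.5 * v * t              # semi-major axis: half the total path length
    c = 0.5 * (src + rec)        # midpoint: centre of the ellipse
    h = 0.5 * abs(rec - src)     # half-offset: distance from centre to each focus
    if h >= a:
        raise ValueError("travel time too short for this source-receiver offset")
    b = np.sqrt(a**2 - h**2)     # semi-minor axis: apex depth below the midpoint
    u = 1.0 - ((x - c) / a) ** 2
    z = np.full_like(x, np.nan)
    inside = u >= 0
    z[inside] = b * np.sqrt(u[inside])
    return z


# Usage: smile for a sample at t = 1 s, v = 2000 m/s, 400 m source-receiver offset.
x = np.linspace(-1500.0, 1500.0, 301)          # image positions, m
smile = migration_impulse_response(x, src=-200.0, rec=200.0, t=1.0, v=2000.0)
```

The slope of this curve grows without bound toward the ends of the ellipse, which is why the steep limbs are the first to be undersampled when traces are coarsely spaced.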
If the surface data are coarsely sampled, the steeper limbs of the smiles fail to interfere destructively, resulting in the steeply dipping artifacts seen in Figure 1 and Figure 2. If the data go abruptly to zero, such as at the edge of a survey or in a no-permit zone, there are no neighboring smiles to interfere destructively, leaving the edge effects seen in Figure 3. High-amplitude spikes in the data also generate smiles, which appear as small amplitude bursts scattered randomly throughout the section. This is clearly seen in the zoom of a small portion of the section shown in Figure 3b.

When such amplitude bursts, or spikes, are randomly present in the data, principal component structure-oriented filtering may not be the best way to enhance the S/N ratio. In Figure 4a we show a segment of a seismic section that contains a significant number of randomly distributed high-amplitude noise bursts. The result of principal component structure-oriented filtering is shown in Figure 4b. Notice that the amplitude bursts have been toned down somewhat, but have not been entirely suppressed. Applying a median filter to the same data (Figure 4c) completely suppresses the noise bursts. By construction, the principal component filter generates the pattern that best represents the most energetic lateral amplitude variation within the analysis window. In the extreme case where one of the traces contains a high-amplitude spike, the most energetic pattern will have the value 1.0 at the spike trace location and zero at the other locations. Counterintuitively, the principal component filter in this case will preserve the noise and reject the signal. The data in Figure 4 are not quite this bad, but the noise amplitude is high enough to contaminate the pattern. In contrast, the nonlinear median filter is constructed to reject anomalously strong negative and positive spikes, resulting in the improved image in Figure 4c.
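The failure mode described above can be reproduced on a toy analysis window of five traces and 2K+1 = 11 samples. The sketch assumes the window is already aligned with the local dip, uses a Ricker wavelet purely as a test signal, and estimates the centre trace from the first eigenvector of the trace covariance matrix. The helper names `ricker`, `pc_filter_center` and `median_filter_center` are hypothetical, and the code is not the authors' implementation.

```python
import numpy as np


def ricker(n, f=0.1):
    """Ricker wavelet of n samples; f is the peak frequency in cycles per sample."""
    t = np.arange(n) - n // 2
    a = (np.pi * f * t) ** 2
    return (1.0 - 2.0 * a) * np.exp(-a)


def pc_filter_center(window):
    """Principal-component estimate of the centre trace of a (samples x traces) window."""
    cov = window.T @ window              # traces x traces (unnormalized) covariance
    _, vecs = np.linalg.eigh(cov)        # eigenvalues in ascending order, so the
    v = vecs[:, -1]                      # last column is the most energetic pattern
    pc = window @ v                      # principal component time series
    return pc * v[window.shape[1] // 2]  # its contribution to the centre trace


def median_filter_center(window):
    """Sample-by-sample median across the traces of the window."""
    return np.median(window, axis=1)


# Laterally consistent reflection: one wavelet with slowly varying amplitude.
w = ricker(11)
clean = np.outer(w, [1.0, 1.05, 1.1, 1.05, 1.0])
noisy = clean.copy()
noisy[2, 0] += 50.0                      # one high-amplitude spike on an edge trace

pc_clean = pc_filter_center(clean)       # reproduces the centre trace exactly
pc_noisy = pc_filter_center(noisy)       # pattern collapses onto the spiked trace
med_noisy = median_filter_center(noisy)  # spike rejected, wavelet recovered
```

With the spike present, the most energetic pattern concentrates on the spiked trace, so the principal component estimate of the centre trace is close to zero: the reflection is rejected. The median recovers the wavelet, although it returns 1.05 times the wavelet rather than the true 1.1, a reminder that the median honors subtle lateral amplitude changes less faithfully than the principal component filter does on clean data.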
Coherence computed with the energy-ratio algorithm from the input and the two filtered outputs in Figure 4 is compared in Figure 5. Notice the sharp definition of the features seen on the slices after median filtering compared with the other two.

Dipping Noise

Steeply dipping noise, sometimes due to shallow backscattered ground roll, can also riddle seismic data. If left in the data, this noise will create artificial patterns on the computed attributes. This noise can be suppressed with dip filtering.

Acquisition Footprint

Acquisition footprint refers to linear patterns in the data that are correlated with the acquisition geometry. In land data, acquisition footprint often arises when the offset and azimuth distribution varies from CMP bin to CMP bin. In marine data, repeatable variations in offset and azimuth often occur due to cable feathering. Spatially periodic changes in offset and azimuth give rise to spatially periodic variations in the stacked data, sometimes from AVO and AVAz effects, but more often from subtle velocity errors that result in a different stack array response. If the pattern is vertically consistent and has a wavelet similar to that of neighboring traces, principal component structure-oriented filtering will treat this consistent amplitude pattern as signal, not noise, and preserve it. In Figure 7 we show the application of both principal component and median … One way to suppress the footprint is to first analyze the pattern in the kx-ky wavenumber domain, and then filter out the footprint wavenumbers. The equivalent display of most-positive curvature (long-wavelength) computed on the footprint-filtered version of the seismic data is shown in Figure 8c. Notice the absence of the north-south footprint striations.

Regularization of Seismic Data with 5-D Interpolation

Seismic attributes computed from suboptimally sampled seismic data, or from data with missing traces, exhibit artifacts.
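Coherence is one of the attributes most affected by such artifacts, and it is also the attribute compared in Figure 5. A simplified energy-ratio coherence can be sketched as the fraction of the energy in an analysis window captured by its principal component, that is, the largest eigenvalue of the trace covariance matrix divided by the matrix trace. This real-valued, fixed-window sketch omits refinements that production implementations may include (dip steering, for example), and the function name is hypothetical.

```python
import numpy as np


def energy_ratio_coherence(window):
    """Simplified energy-ratio coherence of a (samples x traces) window (sketch).

    Coherent energy is the energy of the window projected onto its principal
    component, which equals the largest eigenvalue of the trace covariance
    matrix; the result is that energy divided by the total window energy.
    """
    window = np.asarray(window, dtype=float)
    cov = window.T @ window                # traces x traces (unnormalized) covariance
    total = np.trace(cov)                  # total energy in the window
    if total == 0.0:
        return 0.0                         # dead window: coherence set to zero (our choice)
    lam_max = np.linalg.eigvalsh(cov)[-1]  # eigenvalues are returned in ascending order
    return lam_max / total


# Usage: a laterally consistent reflection versus one offset by a small fault.
t = np.arange(41) - 20
a = (np.pi * 0.1 * t) ** 2
w = (1.0 - 2.0 * a) * np.exp(-a)           # Ricker test wavelet
flat = np.outer(w, [1.0, 0.9, 1.1, 1.0, 0.95])
fault = np.column_stack([w, w, np.roll(w, 3), np.roll(w, 3), np.roll(w, 3)])
coh_flat = energy_ratio_coherence(flat)
coh_fault = energy_ratio_coherence(fault)
```

Traces that differ only by a scale factor give a coherence of 1, so the attribute responds to changes in waveform rather than amplitude; a time shift across part of the window, as at a fault, or uncorrelated noise lowers it.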
Ideally, optimally sampled seismic data are obtained by following an optimal shooting geometry in the field. Practical considerations, however, usually yield seismic data with missing traces, large data gaps or a non-uniform distribution of offsets and azimuths in the bins. In principle, one could fill the data gaps by reshooting the data in those areas. In practice, such infill acquisition can be extremely expensive and is avoided. The second-best approach is to handle the missing data problem in the processing center. Originally, a single missing trace or a few local ones were handled by copying adjacent traces into the CMP bin. Such simplistic methods were superseded by 2-D and later 3-D triangular trace interpolation methods. All these methods use local data to predict the missing data and so are called local methods. Their limitation is that they cannot handle large data gaps.

In the last decade or so, global methods for data interpolation have evolved that use more of the available data to populate the missing data. These methods are multidimensional rather than one-, two- or three-dimensional, operating simultaneously in as many as five different dimensions. As might be expected, they are compute-intensive and have longer runtimes than the local methods. Such 5-D interpolation methods regularize the offset and azimuth distribution in the bins, and hence the simulated acquisition geometry of the seismic data. In doing so, they address the missing data and, with them, the root cause of the subsequent footprint artifacts. In Figure 9 we show chair displays, with seismic amplitude on the vertical sections and coherence on the horizontal sections, before and after 5-D interpolation. Notice that the traces missing before 5-D interpolation are all predicted nicely, and the reflections look more coherent.
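The article does not specify which 5-D algorithm was used. As an illustration of the global idea, the sketch below applies one widely used approach, projection onto convex sets (POCS) with Fourier-domain thresholding, in only two dimensions (time and trace). It assumes dead traces are zero-filled and flagged by a boolean mask; the function name `pocs_interpolate` and its exponentially decaying threshold schedule are illustrative assumptions. Production 5-D methods operate in up to five dimensions and on windowed data, which this sketch does not attempt.

```python
import numpy as np


def pocs_interpolate(data, live, n_iter=50, p_max=0.99, p_min=0.01):
    """Fill dead traces by POCS with 2-D Fourier thresholding (sketch).

    data: (nt, nx) section with dead traces zero-filled.
    live: boolean (nx,) mask, True where a trace was recorded.
    Each iteration keeps only Fourier coefficients above a threshold that
    decays from p_max to p_min times the largest input amplitude, then
    reinserts the recorded traces unchanged.
    """
    data = np.asarray(data, dtype=float)
    live2d = np.broadcast_to(np.asarray(live, dtype=bool), data.shape)
    est = data.copy()
    amax = np.abs(np.fft.fft2(data)).max()   # reference amplitude for thresholds
    for i in range(n_iter):
        frac = p_max * (p_min / p_max) ** (i / max(n_iter - 1, 1))
        spec = np.fft.fft2(est)
        spec[np.abs(spec) < frac * amax] = 0.0   # keep only strong coefficients
        # Honor the recorded traces; use the Fourier estimate only in the gaps.
        est = np.where(live2d, data, np.fft.ifft2(spec).real)
    return est


# Usage: two linear events with roughly 30% of the traces missing at random.
nt, nx = 64, 64
T, X = np.mgrid[0:nt, 0:nx]
true = np.cos(2 * np.pi * (5 * T / nt + 3 * X / nx)) \
    + 0.7 * np.cos(2 * np.pi * (9 * T / nt - 4 * X / nx))
rng = np.random.default_rng(1)
live = rng.random(nx) > 0.3
observed = np.where(live, true, 0.0)
rec = pocs_interpolate(observed, live)
```

Because every Fourier coefficient is estimated from all live traces at once, the method can bridge gaps that local interpolators cannot, provided the events are well represented by a few strong coefficients.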
Similarly, the speckled pattern corresponding to the missing traces on the coherence volume before 5-D interpolation is gone, and the coherence display is much easier to interpret after 5-D interpolation. In Figure 10a we show a time slice at t = 158 ms, where the acquisition footprint appears prominently on the coherence attribute as NE-SW striations that mask the reflection detail behind them. Figure 10b shows the equivalent coherence slice after 5-D regularization, exhibiting a considerable improvement in data quality. Similarly, curvature displays derived from the data after 5-D interpolation are cleaner and clearer, resulting in more confident interpretation, as shown in Figures 10c to 10f.

Seismic data usually suffer from different types of noise. Random noise is the easiest to recognize and the easiest to address. Coherent noise such as acquisition footprint can be more challenging, resulting in coherent artifacts on seismic attribute displays that can mask features of interpretation interest. We have emphasized the importance of conditioning the data, both by noise filtering and by regularizing the data with 5-D interpolation. We suggest that the input data first be examined carefully to understand the type of noise contaminating them, and that an appropriate filtering method then be chosen. Random noise may be handled using principal component structure-oriented filtering, or median filtering when high-amplitude noise bursts are present. Inclined coherent noise can be handled with dip filtering. Acquisition footprint or missing data arising from non-uniform acquisition geometry can be handled with 5-D interpolation. Once such problems are diagnosed and addressed in the input seismic data, seismic attributes computed from them will be more meaningful, will display better and will thus lead to more accurate interpretations.